TL;DR

- AI can write unit tests for you, but a prompt like "write tests for this code" produces garbage.

- This article shows an AI workflow that actually works using GitHub Copilot's instructions and prompt files.

- You decide which test cases to implement, AI writes them, you review the results.

- The article includes a ready-to-use prompt file and an example repository.

Unit tests are a pain to write. They keep your codebase maintainable, sure, but that doesn’t make writing them less tedious. After all, you’ve just implemented the feature and your code works. But now you have to revisit everything and write tests for it.

The obvious idea: why not let AI handle it? It excels at this type of tedious, repetitive task anyway.

But if you just prompt an LLM to “write tests for this code”, you’ll get more garbage than you’d expect: useless test cases, tests tied to implementation details, and, even worse, false positives (tests that pass even though the code is wrong).

This article shows you a better way: an AI workflow that uses GitHub Copilot to do the tedious part of the work for you while you maintain control over test cases and quality.

(TDD aficionados: I see you. There’s something for you at the end as well.)

The problem with AI-generated unit tests

Before we talk about the solution, let’s understand the problem first. Why does AI perform so badly when prompted to write unit tests?

LLMs are expensive to run and context windows are limited. Every new conversation with GitHub Copilot starts fresh with no prior knowledge. Copilot also has to make smart choices about how much of your codebase to load into context. Even you might struggle to derive domain logic from a codebase you’re seeing for the first time. Imagine you could only read parts of it.

Current LLMs are also unreliable at logical reasoning and generalization. They have difficulty deriving intent from code alone and tend to test the code as written rather than the underlying requirements. This leads to shallow tests and false positives. AI also struggles with granularity and often implements several tests for the same requirement. Worst of all: sometimes it gets so fixated on making tests pass that it modifies production code.
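To make the false-positive problem concrete, here is a minimal sketch. The requirement, the `applyDiscount` function, and its boundary bug are all hypothetical and only serve as an illustration: a check that mirrors the implementation passes for every input, while a check derived from the requirement exposes the bug.

```typescript
// Hypothetical requirement: orders of 100 or more get 10% off.
// Hypothetical implementation with a boundary bug: it uses > instead of >=.
function applyDiscount(total: number): number {
  return total > 100 ? total - total / 10 : total;
}

// Shallow check: the expected value is re-derived from the implementation's
// own logic, so the check agrees with the code even where the code is wrong.
function shallowCheckPasses(total: number): boolean {
  const expected = total > 100 ? total - total / 10 : total;
  return applyDiscount(total) === expected;
}

// Requirement-based check: the expected value comes from the requirement,
// so the boundary bug makes this check fail.
function requirementCheckPasses(): boolean {
  return applyDiscount(100) === 90;
}

console.log(shallowCheckPasses(100)); // true, although the result is wrong
console.log(requirementCheckPasses()); // false, the bug is caught
```

The shallow check is the kind of test an assistant tends to produce when it only sees the code; the second one requires knowing what the code is supposed to do.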

In short, current AI assistants:

- don’t have a good overview of the codebase,

- struggle with understanding the business logic,

- produce shallow and redundant tests,

- produce false-positive tests,

- can modify production code instead of fixing failing tests.

That’s a lot of limitations. But there’s a way to work around them.

Solving AI assistant shortcomings

All you need to mitigate these limitations is good instructions for the AI, proper context engineering, and minimal human oversight at the right moments.

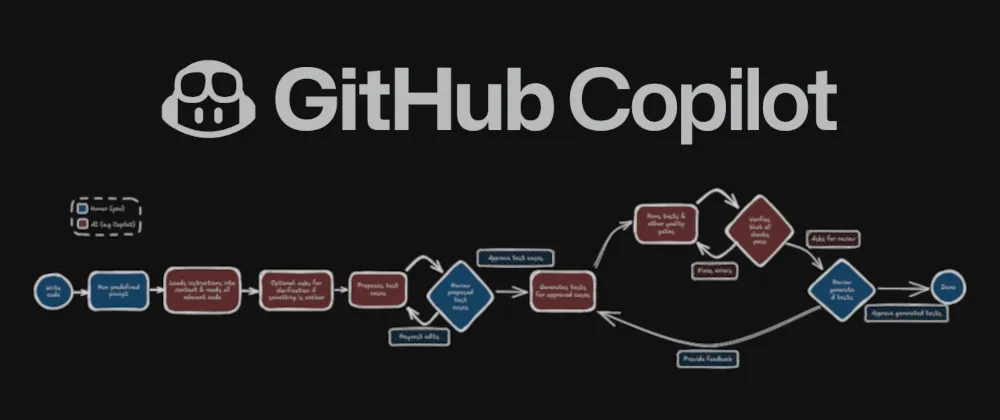

Here’s what it looks like:

You start by running the workflow prompt and telling GitHub Copilot which files to test.

The AI loads all relevant files and instructions into context: repository overview, business domain, architecture, conventions. It reads the files it should test and gathers information while focusing on the underlying business logic. If something is unclear, it asks.

Once ready, the AI proposes test cases. You review them, add new ones, modify or remove existing ones, or approve the proposal. This lets you guide the AI with minimal intervention.

After your approval, the AI gets to work. You can start a new feature, check Slack, get coffee, whatever. GitHub Copilot generates tests for all approved cases, runs them, and iterates until they all pass.

When done, the AI notifies you with a summary. You review the tests, provide feedback if needed, and accept the changes.
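The steps above can be read as a small state machine with two human checkpoints. The sketch below is only an illustration of that flow: the phase and event names are my own and do not correspond to anything in GitHub Copilot.

```typescript
// Phases of the workflow described above (illustrative names, not a Copilot API).
type Phase =
  | "gatherContext"
  | "proposeTestCases"
  | "awaitApproval" // human checkpoint 1: review the proposed test cases
  | "implementTests"
  | "runUntilGreen"
  | "finalReview" // human checkpoint 2: review the written tests
  | "done";

type WorkflowEvent =
  | "contextLoaded"
  | "casesProposed"
  | "approved"
  | "changesRequested"
  | "testsWritten"
  | "allGreen"
  | "accepted";

// Allowed transitions. Any event that is not listed leaves the phase unchanged.
const transitions: Record<Phase, Partial<Record<WorkflowEvent, Phase>>> = {
  gatherContext: { contextLoaded: "proposeTestCases" },
  proposeTestCases: { casesProposed: "awaitApproval" },
  awaitApproval: { approved: "implementTests", changesRequested: "proposeTestCases" },
  implementTests: { testsWritten: "runUntilGreen" },
  runUntilGreen: { allGreen: "finalReview" },
  finalReview: { accepted: "done", changesRequested: "implementTests" },
  done: {},
};

function next(phase: Phase, event: WorkflowEvent): Phase {
  return transitions[phase][event] ?? phase;
}

// Only these two phases need your attention; everything else runs unattended.
function requiresHuman(phase: Phase): boolean {
  return phase === "awaitApproval" || phase === "finalReview";
}
```

Note that implementation cannot start without an explicit `approved` event, which mirrors the rule that the AI must not write tests before you sign off on the list.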

You’ll see this whole process in action in a video later. First, let’s set it up.

Setting up the workflow

The setup requires some configuration, but each step is straightforward. I also created an example repository you can use to get started quickly. Here’s what you need to do:

- Configure Copilot instructions. Copilot performs much better when it understands your project’s conventions, domain, and architecture. Create a `copilot-instructions.md` file to provide such general context to the AI (see example).

- Add code generation instructions for tests. Code generation instruction files are provided to Copilot right when it’s about to generate code. This is the ideal place to give the AI guidance on writing tests in your codebase (think: naming conventions, testing libraries, mocking patterns, etc.). Create a `.instructions.md` file with a file pattern that matches your test files and instructions for your specific codebase and testing framework (see example).

- Enable the todo list tool. LLMs typically struggle with multi-step workflows. That’s why GitHub Copilot’s `manage_todo_list` tool lets the AI track and execute workflow steps. Make sure it’s enabled by following this guide.

- Configure auto-approval. The workflow needs to run some terminal commands autonomously without constantly asking for permission. For VS Code, set up `.vscode/settings.json` to auto-approve certain commands (see example). If you’re using a JetBrains IDE, there’s a similar setting. Make sure to be smart about this list and only allow commands that don’t pose a risk if the AI goes astray.

- Create the workflow prompt. This file contains the full workflow description. In the folder `.github/prompts`, create the file `write-unit-tests.prompt.md` and add the following content:

      ---
      mode: 'agent'
      description: 'Generate unit tests for the provided code.'
      ---

      Your task is to write unit tests for the provided code.

      Use the `manage_todo_list` tool to keep track of the following tasks:

      1. Analyze context files:
         - Read all files that have been provided as context.
         - For each file, briefly summarize its purpose and identify the key business logic and edge cases that require testing.
         - If anything about the business logic is unclear, ask the user to provide more information.

      2. Propose test cases:
         - Based on your analysis, propose a concise list of test case titles (grouped by file/module), e.g.:
           > A. `MyComponent.tsx`:
           >    1. should display a greeting when showGreeting is true.
           >    2. ...
           > B. `useHelloWorld.ts`:
           >    1. should ...
         - Only propose tests for business logic that is relevant and actually requires testing.
         - Ask the user to review and approve or edit this list.
         - Do not proceed to implementation until the user explicitly approves the final list.

      3. Implement approved tests:
         - Implement exactly the test cases approved by the user. Do not add unapproved tests and do not omit any approved test.

      4. Run tests and iterate until green:
         - Run all tests that you added.
         - If any test fails, first verify whether the failure is due to the test (not the product code).
         - If the failure is due to the test, fix the test.
         - If the failure appears to be a product issue, ask the user how to proceed. Do not modify production code without asking the user for consent.
         - Re-run until all tests pass.

      5. Check and fix project quality gates:
         - Run type checks, linters, and any formatting tools configured in the project.
         - If any check fails, first verify whether the failure comes from one of the test files you edited or if it comes from unrelated code.
         - If it comes from one of the test files you edited, fix the error.
         - If it comes from unrelated code, ignore the error.
         - Re-run checks until they are clean or until only unrelated files cause issues.

      6. Final verification and report:
         - Finally, run the full test suite. Confirm that all newly created tests pass.
         - Provide a very brief summary of what you did.

      Rules and constraints:
      - You are only done if **all** the steps have been completed successfully. Otherwise, you must continue.
      - If critical information is missing, always ask the user.
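The quality-gate rule in step 5 of the prompt boils down to a filter: fix failures in the test files the AI edited, ignore failures in unrelated code. The sketch below spells that rule out. The `CheckFailure` shape and the function names are hypothetical; Copilot applies the rule from the prose instructions and does not run code like this.

```typescript
// A failed check as a linter, type checker, or formatter might report it
// (hypothetical shape for illustration).
interface CheckFailure {
  file: string;
  message: string;
}

// Split failures into those the assistant should fix (in test files it edited)
// and those it should ignore (unrelated code), following step 5 of the prompt.
function triageFailures(
  failures: CheckFailure[],
  editedTestFiles: string[],
): { toFix: CheckFailure[]; toIgnore: CheckFailure[] } {
  const edited = new Set(editedTestFiles);
  const toFix = failures.filter((f) => edited.has(f.file));
  const toIgnore = failures.filter((f) => !edited.has(f.file));
  return { toFix, toIgnore };
}

// The loop in step 5 ends once nothing is left to fix,
// even if unrelated files still report problems.
function gatesAreClean(failures: CheckFailure[], editedTestFiles: string[]): boolean {
  return triageFailures(failures, editedTestFiles).toFix.length === 0;
}
```

Scoping the fix-up step this way keeps the AI from "cleaning up" code you never asked it to touch.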

Using the workflow

Here’s how to run the workflow once everything is configured. The video below shows the workflow in action. You can also follow the written steps underneath.

- Start the workflow.

  - Open VS Code and start a new chat in the Copilot chat window.

  - Select a capable model. In my experiments, GPT-4o wasn’t quite up to the task and I usually used Claude Sonnet 4.5, but it ultimately depends on your codebase.

  - Click the Add Context… button in the Copilot chat input field. Select the files you want the AI to write unit tests for.

  - Type `/write-unit-tests` into the Copilot chat.

- Review proposed test cases. The AI will now start gathering context. Once it’s done, it will propose the unit test cases. Carefully review them.

- Approve test cases. Type into the chat window which test cases you’d like to have implemented. You can reference them by number, like “A1, A5, B2”.

- Let the AI work. The AI will get to work. If you have a good allow-list for tool calls, you shouldn’t be bothered until it’s done.

- Final review. After a while, the AI will ask for final approval. Carefully review the written tests and provide feedback where necessary. If you’re happy with the results, accept the changes.
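The approval step relies on the letter-and-number labels from the proposal. In practice Copilot interprets your reply as natural language, but the sketch below shows what a reply such as “A1, A5, B2” resolves to and what “implement exactly the approved cases” means. All names in it are hypothetical.

```typescript
// A proposed test case, labeled by group letter and number as in the prompt file.
interface ProposedCase {
  group: string;
  index: number;
  title: string;
}

// Parse a reply like "A1, A5, B2" into group/index references.
function parseApproval(input: string): { group: string; index: number }[] {
  return input
    .split(",")
    .map((part) => part.trim())
    .filter((part) => part.length > 0)
    .map((part) => {
      const match = /^([A-Za-z])(\d+)$/.exec(part);
      if (!match) {
        throw new Error(`Unrecognized test case reference: "${part}"`);
      }
      return { group: match[1].toUpperCase(), index: Number(match[2]) };
    });
}

// Resolve the references against the proposal. Unknown references are an
// error rather than a silent skip, so no approved case is omitted and no
// unapproved case slips in.
function selectApproved(proposed: ProposedCase[], input: string): ProposedCase[] {
  return parseApproval(input).map((ref) => {
    const found = proposed.find((c) => c.group === ref.group && c.index === ref.index);
    if (!found) {
      throw new Error(`No proposed test case ${ref.group}${ref.index}`);
    }
    return found;
  });
}
```

For example, with a proposal containing A1, A2, and B1, the reply “A1, B1” selects two cases and leaves A2 unimplemented.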

If you want to try out the workflow without setting everything up yourself, feel free to clone the example repository and follow the steps above.

Discussion & recap

Once everything is set up properly, this workflow can be quite powerful. You can one-shot an entire test suite and move on to other work while the AI handles the tedious parts. Getting there takes some trial and error with your instruction files, but I found the experimentation process surprisingly rewarding once results started coming together.

That said, there’s a tradeoff worth considering. Normally, writing tests after code serves as a quality review step. You catch issues while thinking through edge cases. This workflow skips that. The AI can catch bugs during test generation (it happened to me several times) but I wouldn’t count on it. You still need to review the generated tests carefully, and depending on how well-tuned your setup is, this might save you significant time or barely make a dent.

For TDD enthusiasts: you can invert this workflow. Write the tests first and let the AI generate the code to make them pass. Same configuration steps, same human checkpoints, just reversed. If you create a TDD version of the prompt file, I’d love to see it.

Conclusion

Asking an AI to “write tests pls” won’t magically produce quality unit tests. But with the right workflow, proper context, a structured prompt, and two deliberate human checkpoints, you can hand off the tedious work while you control which tests are written and provide the common sense the AI lacks.

It’s not zero-effort. You need to set up instruction files and tune the workflow to your codebase. But you can use the prompt file above and the example repository to get started quickly. If you run into issues or have questions, reach out. I’m curious to hear how this works for different codebases.
